Chinese Scientists Created World’s Fastest Vision Chip for Autonomous Cars

Our eyes are windows to an astonishing world, and the human visual system is the intricate machinery that makes it all possible. With incredible precision and detail, it captures and interprets the world around us, seamlessly processing visual information through a complex network of neural pathways. This allows us to effortlessly recognize objects, navigate our surroundings, and experience the breathtaking beauty of the visual world in all its glory.

Current computer vision technology pales in comparison. Imagine a self-driving car cruising down a sun-drenched highway, only to be thrown into a panic by a shimmering mirage on the asphalt. Or a robot, tasked with fetching a coffee cup, getting confused by a shadow cast across the table. These are the limitations of current computer vision systems and sensors: they still struggle to see the world with the same adaptability and understanding as a human. While they can process information with lightning speed and pinpoint accuracy, they’re easily fooled by simple tricks of light and shadow and have trouble grasping the nuances of a cluttered, dynamic world. Like a child trying to decipher a complex puzzle, they’re still learning to see the world with the same depth and intuition as their human counterparts.

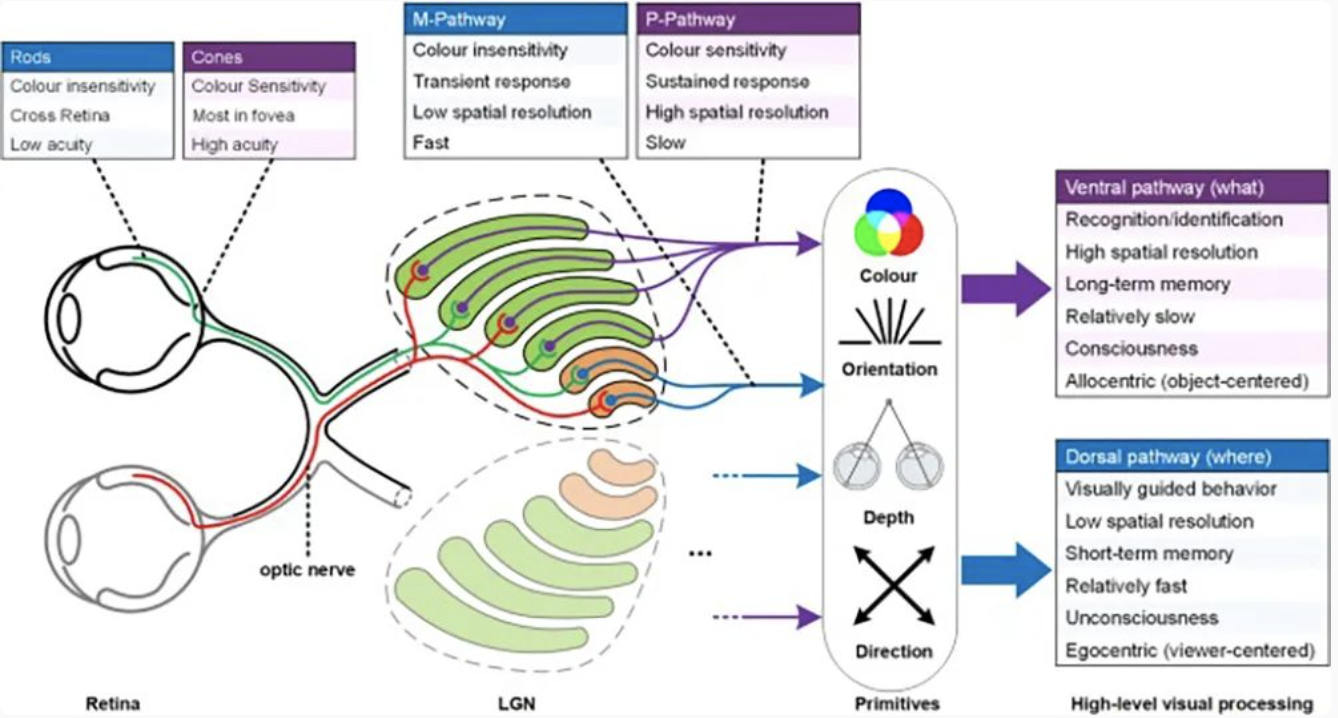

The secret of the human visual system lies in two remarkable pathways that work in harmony to process visual information. These pathways, known as the ventral stream and the dorsal stream, play distinct roles in our perception of and interaction with the world.

The ventral stream, often referred to as the “what” pathway, originates in the primary visual cortex (V1) and extends to the inferior temporal cortex. Its primary task is object recognition – the ability to identify and categorize what we see. This pathway meticulously dissects visual input into its fundamental components, such as color, shape, texture, and form. It then weaves these components together to form a coherent representation of objects and scenes, allowing us to make sense of the visual world around us.

On the other hand, we have the dorsal stream, also known as the “where/how” pathway. Like the ventral stream, it originates in V1, but it projects to the posterior parietal cortex. The dorsal stream is responsible for processing vital information about the location and motion of objects in our visual field. It serves as our guide for spatial awareness and actions. By utilizing visual primitives, the dorsal stream constructs representations of the relationships between objects and ourselves. This enables us to swiftly process visual information and, in turn, execute actions like reaching and grasping with remarkable precision.

In a nutshell, the ventral stream takes a “what” approach, deconstructing visual input into its essential features to facilitate object recognition. Conversely, the dorsal stream adopts a “where/how” strategy, utilizing spatial primitives to guide our actions based on the visual information received. Together, these two pathways work in tandem, allowing our visual system to not only recognize objects accurately but also respond swiftly to visual stimuli in our environment.

Now, the burning question remains: could artificial systems ever emulate nature’s crowning achievement and model computer vision after the human visual system’s remarkable prowess?

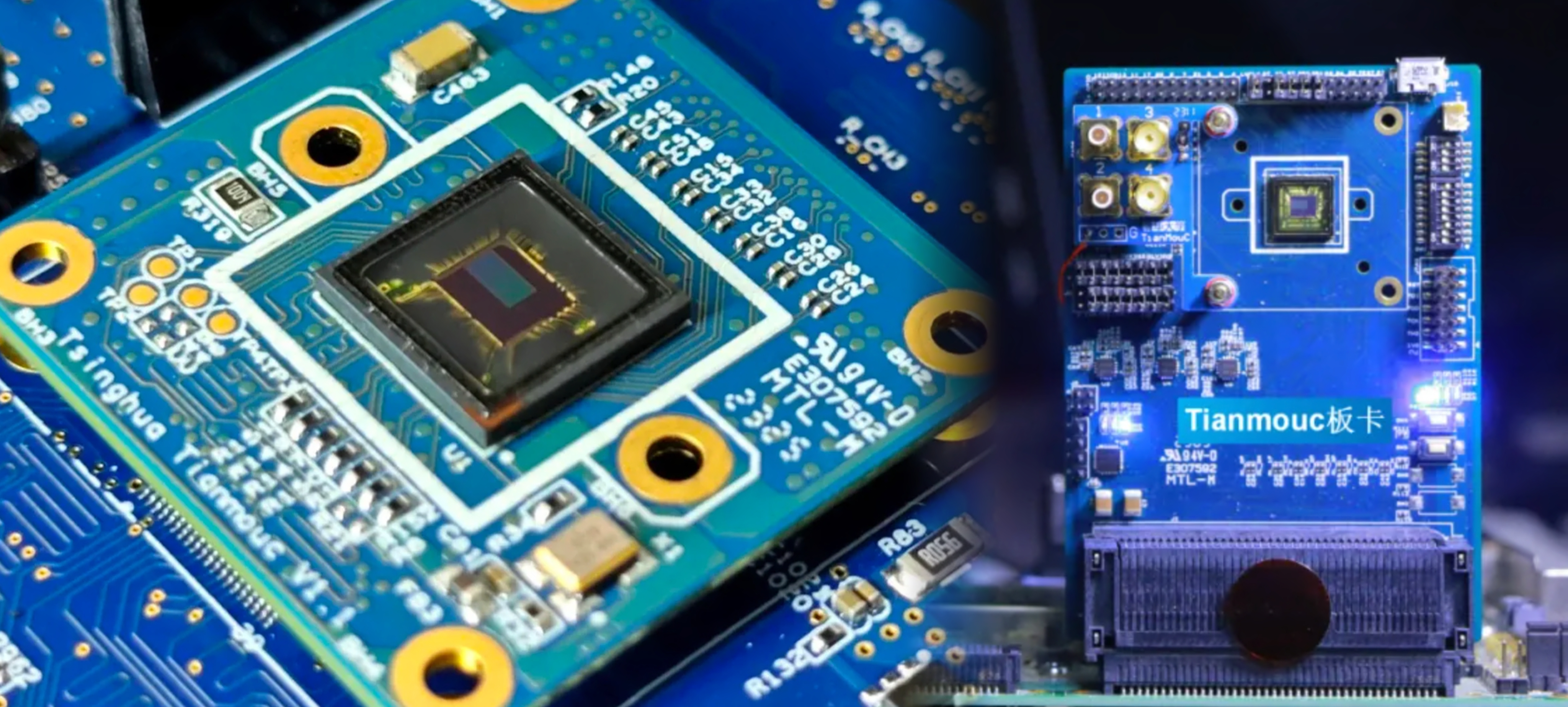

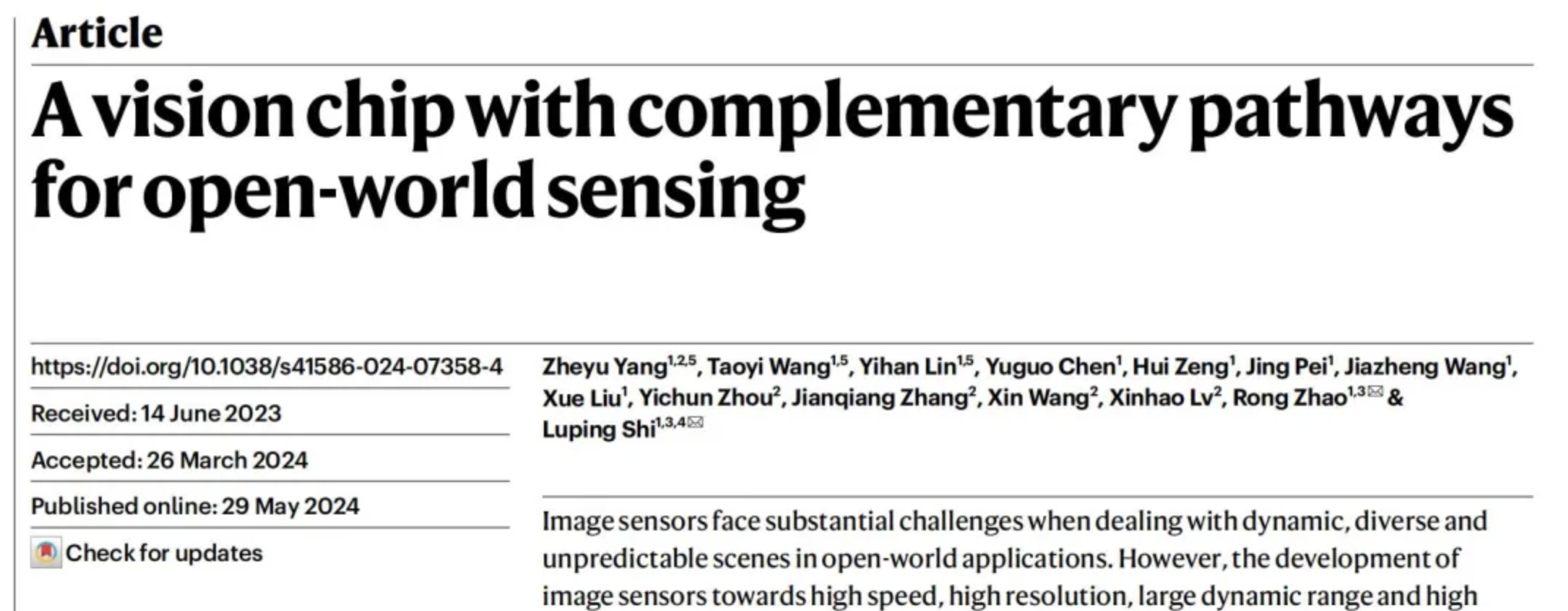

Scientists at China’s Tsinghua University are convinced of this tantalizing possibility. Featured on the cover of Nature, the prestigious scientific journal, their latest achievement marks the first-ever vision chip with complementary pathways designed for open-world sensing. In other words, they have succeeded in replicating key principles of the human visual system on a microchip, one that could let robots see the world more as we do.

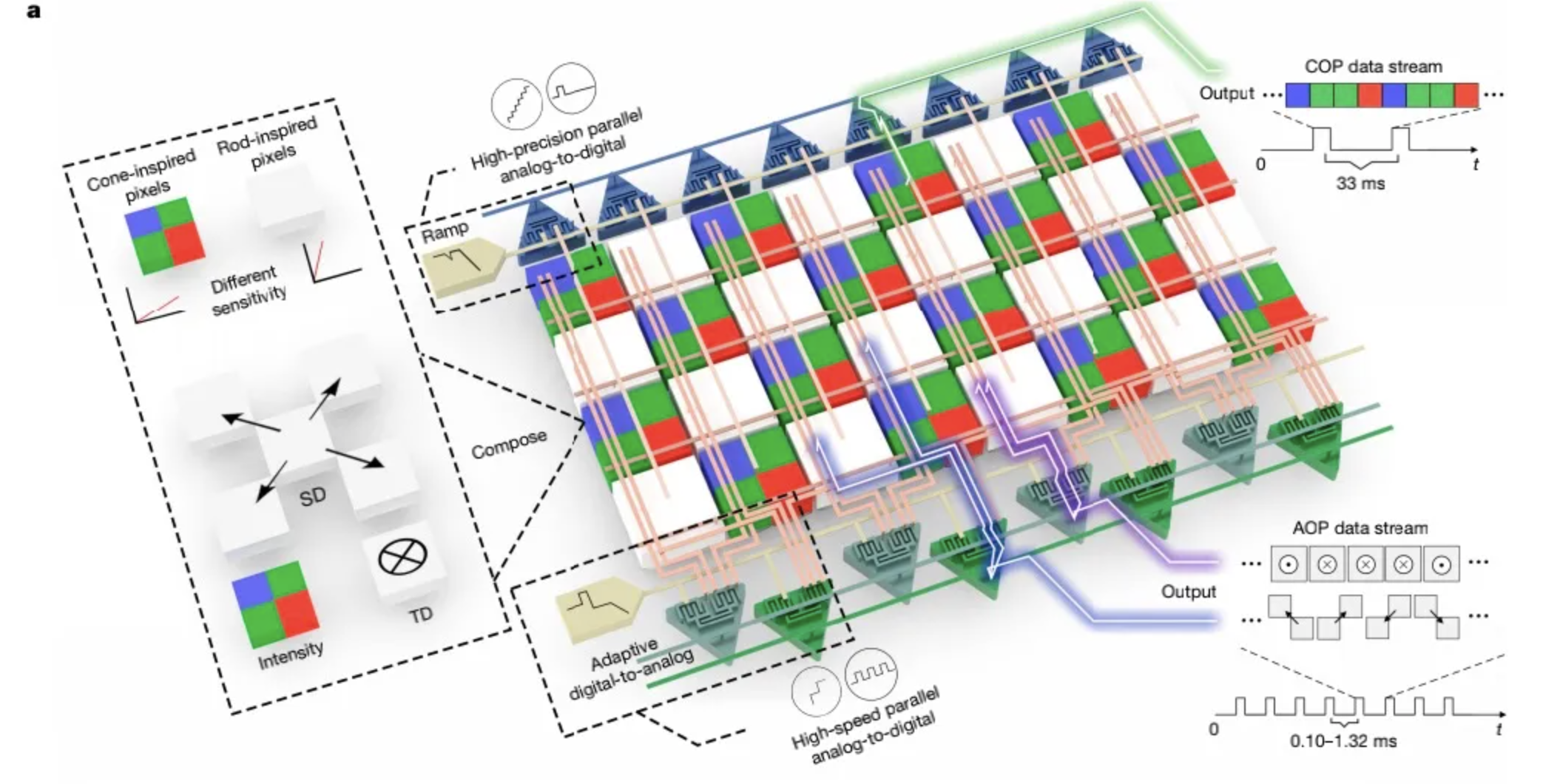

The chip, Tianmouc, whose name literally means “sky’s eye”, employs an innovative hybrid pixel array divided into “cone-type pixels” that emulate the cone cells in human eyes to capture color, and “rod-type pixels” that mimic the rod cells for rapid spatiotemporal perception. The entire pixel array is back-illuminated, with light entering from the rear, which enhances photon collection efficiency. While the photosensitive components of the “cone-type” and “rod-type” pixels are similar, comprising photodiodes and transfer gates, the “rod-type” pixels are uniquely integrated with multiple storage units that preserve temporal information at the pixel level, preparing the data for spatiotemporal computing.

Within the Tianmouc chip, two distinct pathways coexist – the cognitive pathway and the action pathway – their readout circuits subtly diverging. The cognitive pathway harnesses high-precision analog-to-digital converters to transform the signals harvested by the cone-type pixels into dense data matrices. In contrast, the action pathway adopts a multi-tier architecture, first reducing the spatiotemporal difference signals emanating from the rod-type pixels, then employing an adaptive quantization circuit to encode them into digital pulse sequences of specified bit-width, thereby adroitly curtailing the data payload.
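To make the action pathway's idea more concrete, here is a minimal Python sketch of differencing two frames and quantizing the result to a small bit-width. It is only an illustration under stated assumptions: the function names, the fixed `threshold`, and the toy frames are hypothetical, and the chip's actual adaptive quantization circuit is not described here in enough detail to reproduce.

```python
def temporal_difference(prev_frame, curr_frame):
    """Per-pixel difference between two consecutive frames (lists of rows)."""
    return [[c - p for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev_frame, curr_frame)]


def quantize(diff, threshold, bits):
    """Map each difference to a signed integer code of the given bit-width.

    Assumption: a fixed threshold stands in for the chip's adaptive scheme.
    Differences smaller than `threshold` in magnitude become 0, which is what
    makes the output sparse. Larger ones are scaled by the threshold and
    clipped to the range a signed `bits`-bit code can hold.
    """
    max_code = 2 ** (bits - 1) - 1
    out = []
    for row in diff:
        out_row = []
        for d in row:
            if abs(d) < threshold:
                out_row.append(0)
            else:
                code = int(d / threshold)  # truncates toward zero
                out_row.append(max(-max_code, min(max_code, code)))
        out.append(out_row)
    return out


# Toy 2x3 frames: only two pixels change noticeably between them.
prev_frame = [[100, 100, 100], [100, 100, 100]]
curr_frame = [[101, 100, 160], [100, 40, 100]]

diff = temporal_difference(prev_frame, curr_frame)
codes = quantize(diff, threshold=8, bits=4)
print(codes)
```

Most entries in `codes` end up as zero, which is the property that makes the difference data cheap to transmit.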

Capitalizing on the inherent sparsity of the spatiotemporal difference data generated by the action pathway, the chip’s design incorporates an ingenious address-event representation mechanism: meticulously categorizing and packaging the data according to its temporal occurrence, pixel position, positive/negative polarity, and other attributes, to form compact data frames, further optimizing transmission bandwidth.
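The address-event idea can be sketched in a few lines of Python: instead of sending every pixel, send only the non-zero ones, each tagged with its time, position, and polarity. The `Event` fields and function names below are hypothetical choices made for illustration, not the chip's actual packet format.

```python
from typing import NamedTuple


class Event(NamedTuple):
    t: int          # timestamp of the frame the event belongs to
    x: int          # pixel column
    y: int          # pixel row
    polarity: int   # +1 for brighter, -1 for darker
    magnitude: int  # quantized size of the change


def encode_events(codes, t):
    """Turn a sparse grid of signed codes into a list of events."""
    events = []
    for y, row in enumerate(codes):
        for x, code in enumerate(row):
            if code != 0:
                polarity = 1 if code > 0 else -1
                events.append(Event(t, x, y, polarity, abs(code)))
    return events


def decode_events(events, width, height):
    """Rebuild the grid of signed codes from a list of events."""
    frame = [[0] * width for _ in range(height)]
    for e in events:
        frame[e.y][e.x] = e.polarity * e.magnitude
    return frame


# A 2x3 grid in which only two of the six pixels changed.
codes = [[0, 0, 7], [0, -7, 0]]
events = encode_events(codes, t=42)
restored = decode_events(events, width=3, height=2)
print(len(events), "events for", 3 * 2, "pixels")
```

The saving grows with sparsity: when few pixels change, the event list is far shorter than the full grid, and the round trip through `decode_events` still recovers the original codes.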

As a result, Tianmouc can acquire visual information at a rate of 10,000 frames per second with 10-bit precision and a high dynamic range of 130 dB. By comparison, the Grove Vision AI V2, an off-the-shelf embedded vision module, is reported to operate at 30.3 frames per second.
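A quick back-of-the-envelope calculation helps put these figures in perspective. The Python sketch below assumes the usual image-sensor convention that dynamic range in decibels is 20·log10 of the brightest-to-darkest signal ratio, and it assumes a hypothetical vehicle speed of 30 m/s (108 km/h) purely for illustration.

```python
def db_to_ratio(db):
    """Convert a dynamic range in dB to a linear ratio (20*log10 convention)."""
    return 10 ** (db / 20)


def distance_per_frame(speed_m_s, fps):
    """Distance travelled, in metres, between two consecutive frames."""
    return speed_m_s / fps


FAST_FPS = 10_000   # frame rate quoted for Tianmouc
SLOW_FPS = 30.3     # frame rate quoted for the comparison device
SPEED_M_S = 30.0    # assumed vehicle speed, about 108 km/h

print(f"130 dB is a ratio of about {db_to_ratio(130):,.0f} : 1")
print(f"Frame-rate ratio: about {FAST_FPS / SLOW_FPS:.0f}x")
print(f"Travel per frame at {FAST_FPS} fps: "
      f"{distance_per_frame(SPEED_M_S, FAST_FPS) * 1000:.1f} mm")
print(f"Travel per frame at {SLOW_FPS} fps: "
      f"{distance_per_frame(SPEED_M_S, SLOW_FPS):.2f} m")
```

Under these assumptions, a car covers only a few millimetres between frames at 10,000 frames per second, versus roughly a metre at 30.3 frames per second.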

Tianmouc also reduces the amount of bandwidth needed by 90% and uses very little power. This allows it to overcome the limitations of traditional visual sensing methods and perform well in a variety of extreme scenarios, ensuring the stability and safety of the system.

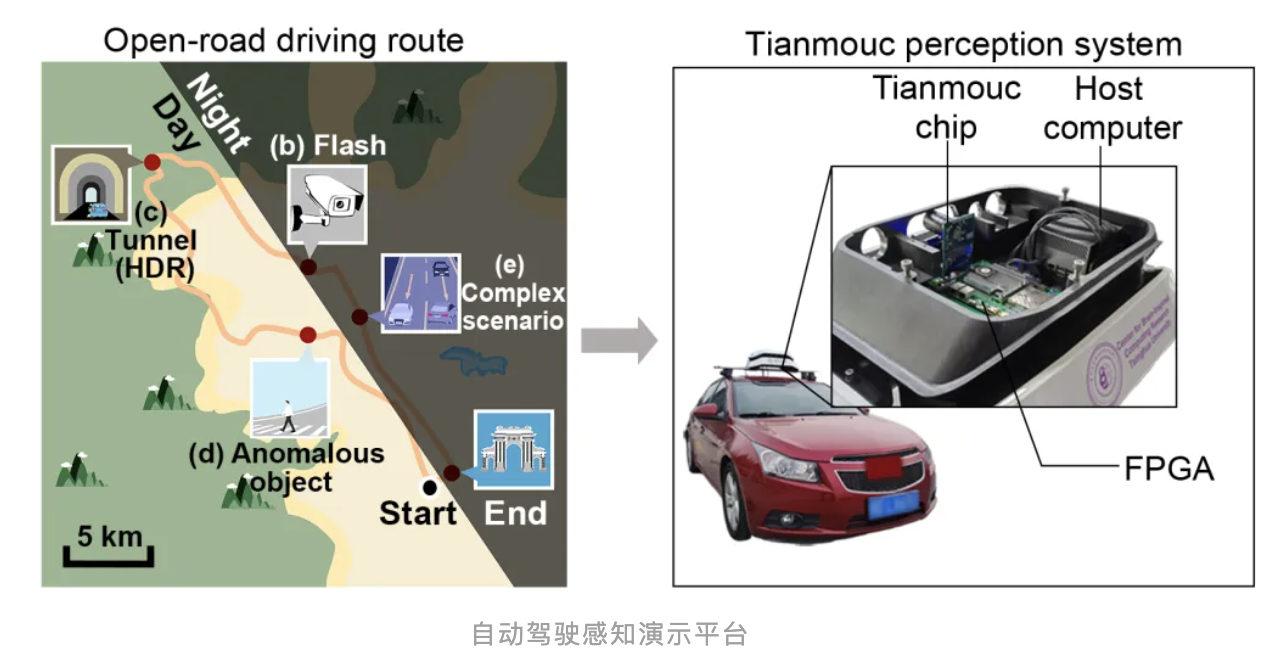

When used in an autonomous driving system, the chip senses its surroundings reliably in real-world situations. It has been tested in a variety of lighting conditions and complex scenarios, such as glare, tunnels, and the sudden appearance of abnormal objects, and has performed well in all of them. The chip uses special algorithms to sense the environment accurately even when the lighting changes, and it can suppress over- and under-exposure to provide stable imaging. Moreover, Tianmouc’s high-speed sensing allows it to quickly detect and track moving objects, and it can also recognize unusual objects such as warning signs.

“The successful development of the Tianmouc represents a significant breakthrough in the field of visual sensing technology,” said Zhao Rong, a professor in the Department of Precision Instruments at Tsinghua University and a co-corresponding author of the paper. “This chip has the potential to revolutionize a wide range of applications, including autonomous driving, embodied intelligence, and more. We are excited to see the impact that it will have in the future.”

https://www.nature.com/articles/s41586-024-07358-4